Chat with Deepseek on your Mac via the browser

LLMs that run on your local machine are getting better and better. Indeed, thanks to the AI arms race, these models will soon be good enough for many of the tasks individuals and companies want to use AI for. I’ve already covered how to install an LLM locally using Ollama, but how do you chat with the model in a browser? Enter Open WebUI (WebUI for short).

Installing WebUI

WebUI is an open-source project that describes itself as:

“an extensible, self-hosted AI interface that adapts to your workflow, all while operating entirely offline.”

Their first recommended installation option, using Docker, didn’t work for me, but luckily they also provide a guide to installing it with uv, the new Python package manager.

Getting started is as simple as opening your terminal and pasting this in:

    DATA_DIR=~/.open-webui uvx --python 3.11 open-webui@latest serve

This uses uvx to run the latest open-webui package under Python 3.11, keeping WebUI’s data in ~/.open-webui.

As an aside, the easiest way to chat with a local model is probably LM Studio, which provides a GUI for downloading models through a point-and-click interface. I’m more interested in an option that can become part of a product and is more customisable.

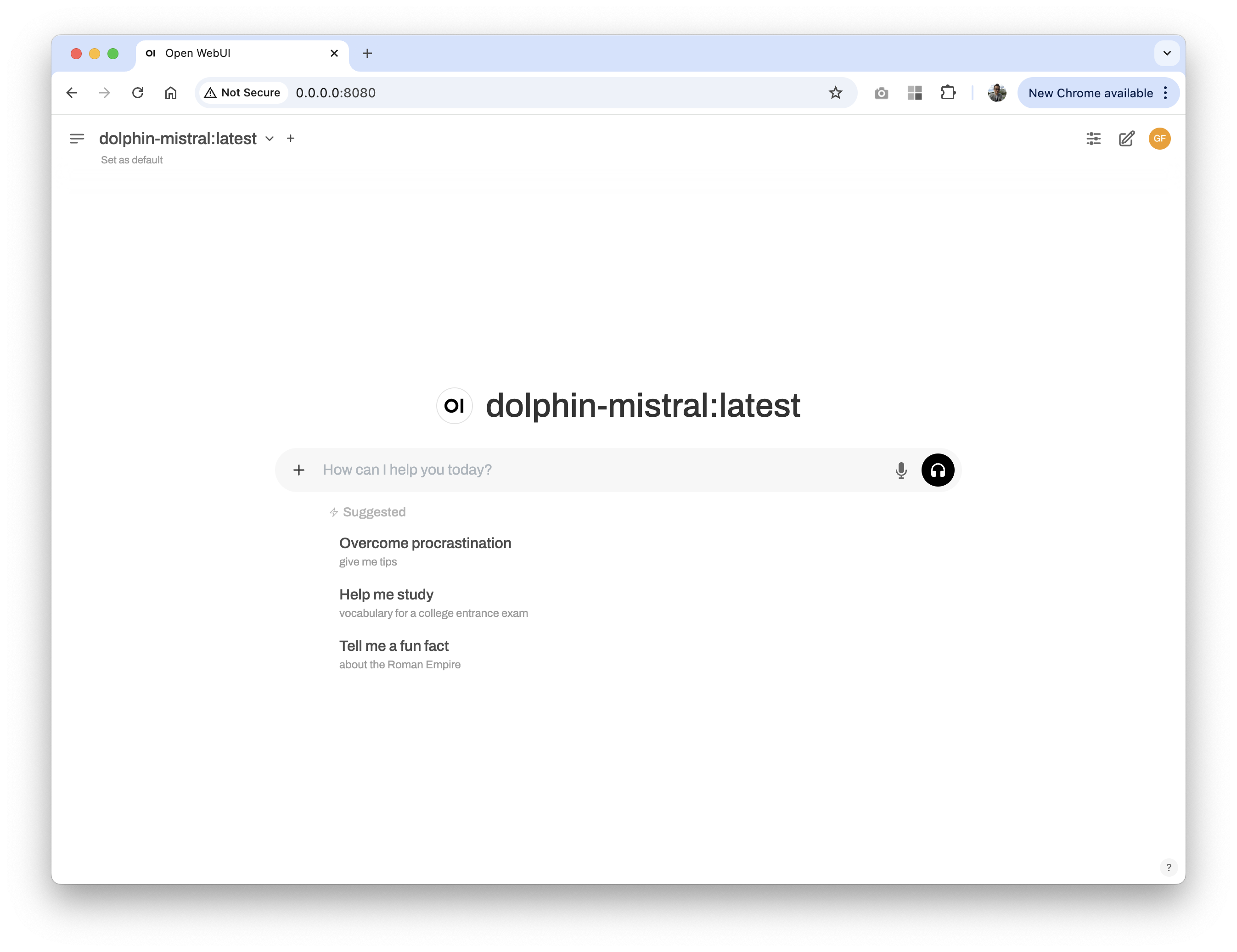

It’s alive - launching WebUI

After waiting for everything to download, the server is running. By default, WebUI is served at http://localhost:8080.
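If you want a script to know when the server has finished starting (for example, before opening the browser), you can poll it over HTTP. This is a minimal sketch using only Python’s standard library, assuming WebUI is listening on its default address http://localhost:8080; `http_ok` and `wait_for_server` are hypothetical helper names for illustration.

```python
import http.client
import time
import urllib.request
from typing import Callable

# Assumption: `open-webui serve` listens on port 8080 unless told otherwise.
WEBUI_URL = "http://localhost:8080"


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET request to `url` succeeds (urlopen follows redirects)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, http.client.HTTPException):
        # OSError covers URLError, HTTPError, refused connections and timeouts.
        return False


def wait_for_server(
    url: str = WEBUI_URL,
    timeout_s: float = 120.0,
    interval_s: float = 2.0,
    probe: Callable[[str], bool] = http_ok,
) -> bool:
    """Poll `url` until `probe` succeeds; return False if `timeout_s` elapses first."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe(url):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Calling `wait_for_server()` returns True once the page answers, or False after two minutes. The probe is a parameter so the polling logic can be exercised without a running server.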

Pull the Deepseek model with Ollama

WebUI defaults to the Mistral model I already have on my machine, so we need to download Deepseek:

    ollama pull deepseek-r1

This defaults to the 7 billion parameter model. My Mac could probably handle the 32 billion parameter version (deepseek-r1:32b), but this will do for now. Next, we select Deepseek in WebUI’s model picker and upload some PDF documents.
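WebUI builds its list of models from what Ollama has installed, which is why it offered the Mistral model I already had. Running `ollama list` in the terminal shows the same list; to check from a script that the pull worked, you can query Ollama’s REST API. This is a minimal sketch, assuming Ollama is running at its default address http://localhost:11434 and exposes `GET /api/tags`; `parse_model_names`, `list_local_models` and `has_model` are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: Ollama is running at its default address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_response: dict) -> list[str]:
    """Extract sorted model names, e.g. 'deepseek-r1:latest', from an /api/tags reply."""
    return sorted(model["name"] for model in tags_response.get("models", []))


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as response:
        return parse_model_names(json.load(response))


def has_model(names: list[str], wanted: str) -> bool:
    """True if `wanted` is installed; an untagged name means the ':latest' tag."""
    if ":" not in wanted:
        wanted += ":latest"
    return wanted in names
```

Once the pull has finished, `has_model(list_local_models(), "deepseek-r1")` should be True. A model pulled without a tag is stored as `name:latest`, which is why `has_model` appends `:latest`.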

Chatting about PDF documents

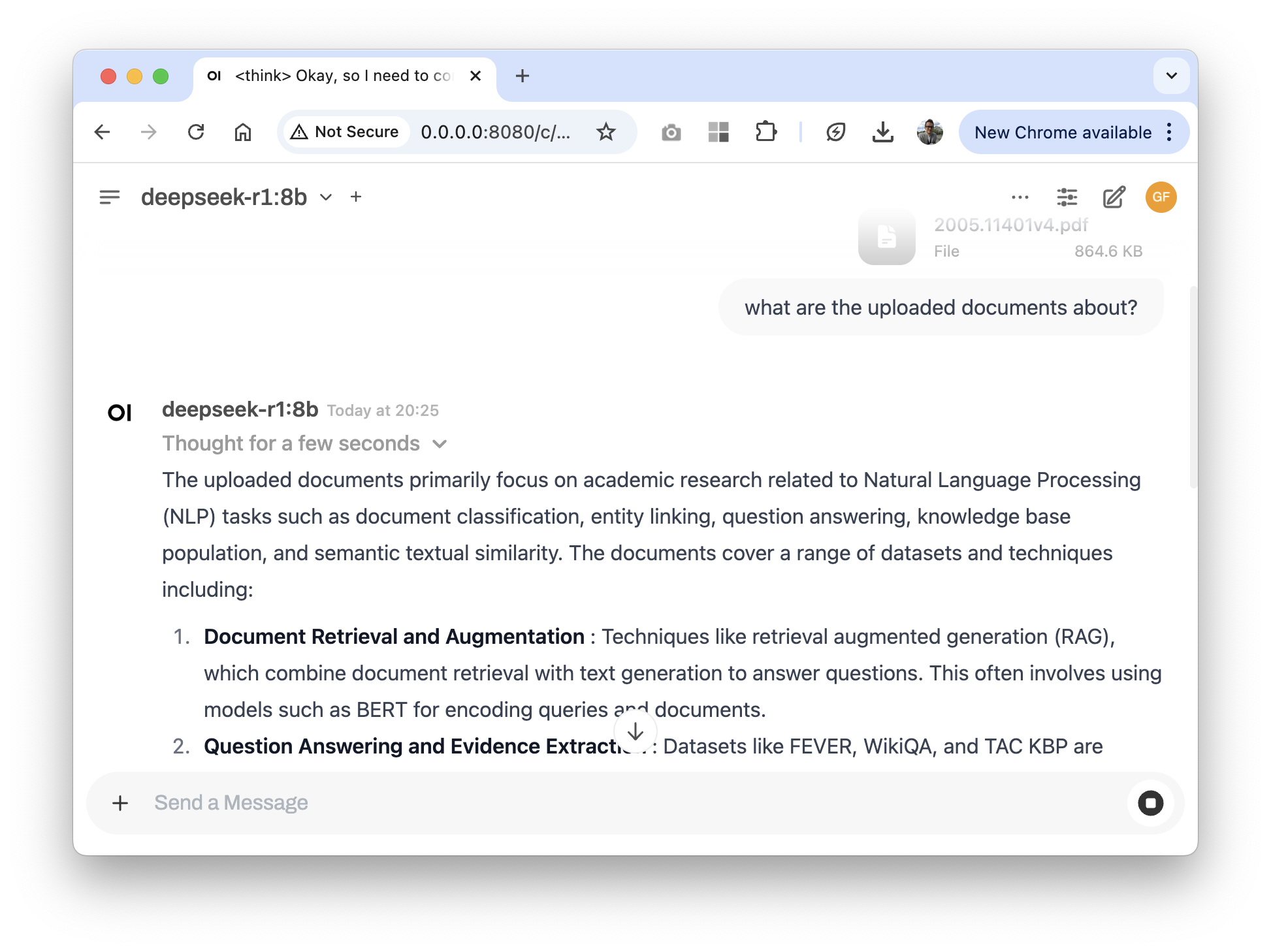

I uploaded four documents from the RAG section of Latent Space’s “The 2025 AI Engineer Reading List” and asked:

what are the uploaded documents about?

The LLM running on my Mac, Deepseek’s 7 billion parameter model, did a fair job of summarising what the uploaded documents are about.
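Under the hood, WebUI sends the conversation to Ollama, and you can do the same from your own code, which matters if this is to become part of a product. This sketch calls Ollama’s `POST /api/chat` endpoint directly, assuming the default address http://localhost:11434. Two assumptions to flag: it simply pastes document text into a system message, which is cruder than WebUI’s retrieval over uploaded files, and it strips the `<think>...</think>` reasoning that deepseek-r1 may emit inline, depending on the Ollama version. `build_chat_request`, `strip_reasoning` and `ask` are hypothetical names.

```python
import json
import re
import urllib.request

# Assumption: Ollama's default address; /api/chat is its chat endpoint.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
# deepseek-r1 may wrap its reasoning in <think>...</think> before the answer.
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def build_chat_request(question: str, documents: dict[str, str], model: str = "deepseek-r1") -> dict:
    """Build an /api/chat payload that pastes each document's text into a system message."""
    context = "\n\n".join(f"### {title}\n{text}" for title, text in documents.items())
    return {
        "model": model,
        "stream": False,  # ask for one JSON object instead of a stream of chunks
        "messages": [
            {"role": "system", "content": "Answer using these documents:\n\n" + context},
            {"role": "user", "content": question},
        ],
    }


def strip_reasoning(reply: str) -> str:
    """Drop any <think>...</think> section, keeping only the final answer."""
    return THINK_BLOCK.sub("", reply).strip()


def ask(question: str, documents: dict[str, str], model: str = "deepseek-r1") -> str:
    """Send one question to Ollama and return the answer without the reasoning."""
    payload = json.dumps(build_chat_request(question, documents, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    # A reasoning model can take a while to answer, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    return strip_reasoning(body["message"]["content"])
```

Extracting text from the PDFs is left out here; `documents` maps each title to whatever text you already have.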

Then I asked:

which one of the documents should I look at to know more about benchmarking?

This was less successful. The model just gave me general advice on what to look for, rather than pointing out the Massive Text Embedding Benchmark (MTEB) paper.
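When a model answers generically like this, it can help to check which document a simple relevance score would pick for the same question. The toy sketch below ranks documents by bag-of-words cosine similarity, with a crude suffix strip so that “benchmarking” matches “benchmark”. It is an illustrative stand-in, not WebUI’s retrieval pipeline (which is based on embeddings), and it assumes you already have each document’s text as a string; the stopword list and helper names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list: common words that carry no topic information.
STOPWORDS = {
    "a", "about", "an", "and", "at", "document", "documents", "for", "i", "in",
    "know", "look", "more", "of", "on", "one", "should", "the", "to", "which",
}


def tokens(text: str) -> list[str]:
    """Lowercase words minus stopwords, with a crude 'ing'/'s' suffix strip."""
    result = []
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        if word in STOPWORDS:
            continue
        for suffix in ("ing", "s"):
            # Only strip from longer words, so 'is' or 'this' are left alone.
            if word.endswith(suffix) and len(word) > len(suffix) + 3:
                word = word[: -len(suffix)]
                break
        result.append(word)
    return result


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_documents(question: str, documents: dict[str, str]) -> list[tuple[str, float]]:
    """Return (title, score) pairs, best match first."""
    q = Counter(tokens(question))
    scores = [(title, cosine(q, Counter(tokens(text)))) for title, text in documents.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

The top-ranked title is the document you would expect a good answer to point to; keyword overlap is only a rough proxy for relevance.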

Final thoughts

Setting up my own (albeit far less capable) version of ChatGPT on my Mac took me 30-60 minutes. WebUI also appears to have user management, a plugin architecture and much more, and it’s easy to imagine it running on a company intranet, connected to documents that can’t be shared with the outside world. As private, local models become more and more powerful, the day every business runs its own internal chat AI is certainly approaching.