Command Line Tool for Code Reviews with an AI Model Running Locally

Since I am too impatient to wait for access to Copilot-powered code reviews, I wrote a command-line tool that fetches pull requests from GitHub and reviews them with AI.

I’m also an enthusiast for running LLMs locally, so the tool lets users choose between models from OpenAI and local models installed with Ollama.

Introducing: llm-code-review

Install

pip install llm-code-review

How to use

llm-code-review [repo-owner]/[repo] [PR number]

For example, to get a code review of the first-ever pull request to the repo for this tool, run:

llm-code-review geirfreysson/llm-code-review 1

Run without installing with uv

You can skip the pip install step if you have Astral’s uv installed.

uvx llm-code-review geirfreysson/llm-code-review 1

Run with local LLM

If you don’t fancy sending your code to the cloud, you can use a local model, powered by Ollama.

llm-code-review geirfreysson/llm-code-review 1 --model ollama:deepseek-r1:8b

Pipe your results into LLMs

One cool thing about the command line is that you can pipe the result of the code review into Simon Willison’s LLM tool. Just call llm with the parameter --system along with your prompt.

$ uvx llm-code-review geirfreysson/llm-code-review 1 | llm --system "Is this fix absolutely critical, i.e. will it break something, answer only with critical or not critical"

Not critical

No critical comments on the pull request. That’s something.
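If you would rather script that pipeline than type it, here is a minimal Python sketch of the same idea. It assumes both llm-code-review and llm are installed and on your PATH. The helper names (build_review_command, is_critical, review_is_critical) are made up for this example and are not part of either tool. The only flags used are the ones shown above: --model and --system.

```python
import subprocess

# System prompt copied from the shell example above.
SYSTEM_PROMPT = (
    "Is this fix absolutely critical, i.e. will it break something, "
    "answer only with critical or not critical"
)


def build_review_command(repo, pr_number, model=None):
    """Build the argv list for llm-code-review (hypothetical helper)."""
    command = ["llm-code-review", repo, str(pr_number)]
    if model is not None:
        # e.g. "ollama:deepseek-r1:8b" to review with a local model
        command += ["--model", model]
    return command


def is_critical(verdict):
    """Interpret the one-line verdict from llm.

    Assumption: the model follows the prompt and answers with exactly
    "critical" or "not critical". Anything else counts as not critical.
    """
    normalized = verdict.strip().strip(".").lower()
    return normalized == "critical"


def review_is_critical(repo, pr_number, model=None):
    """Run the review, feed it to llm on stdin, and return the verdict."""
    review = subprocess.run(
        build_review_command(repo, pr_number, model),
        capture_output=True, text=True, check=True,
    ).stdout
    verdict = subprocess.run(
        ["llm", "--system", SYSTEM_PROMPT],
        input=review, capture_output=True, text=True, check=True,
    ).stdout
    return is_critical(verdict)
```

Calling review_is_critical("geirfreysson/llm-code-review", 1) would then give you a boolean you could use in a script or a CI step. Models do not always stick to a one-word answer, so treat the verdict parsing as a rough heuristic.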

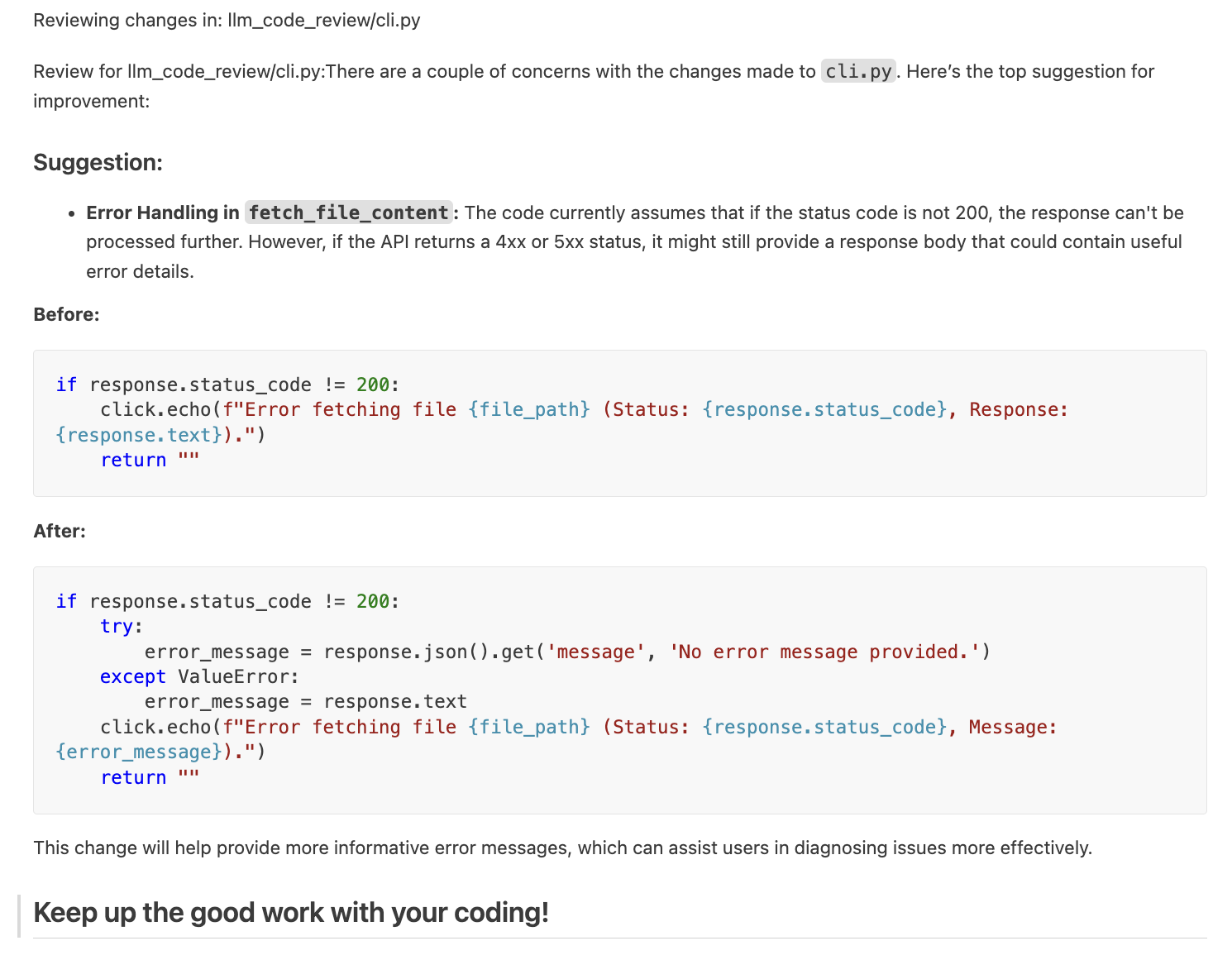

Output from the code review

The output looks something like this: